Clustering Grocery Items

Project Description

This project is the 9th problem at Take-Home Data Science Challenge.

Company XYZ is an online grocery store. In the current version of the website, they have manually grouped the items into a few categories based on their experience. However, they now have a lot of data about user purchase history. Therefore, they would like to put the data into use!

This is what they asked you to do:

- The company founder wants to meet with some of the best customers to go through a focus group with them. You are asked to send the ID of the following customers to the founder:

- the customer who bought the most items overall in her lifetime

- for each item the customer who bought that product the most

- Cluster items based on user co-purchase history. That is, create clusters of products that have the highest probability of being bought together. The goal of this is to replace the old/manually created categories with these new ones. Each item can belong to just one cluster.

Data Loading

import pandas as pd

purchase=pd.read_csv("grocery/purchase_history.csv")

items = pd.read_csv("grocery/item_to_id.csv")

purchase.head()

| user_id | id | |

|---|---|---|

| 0 | 222087 | 27,26 |

| 1 | 1343649 | 6,47,17 |

| 2 | 404134 | 18,12,23,22,27,43,38,20,35,1 |

| 3 | 1110200 | 9,23,2,20,26,47,37 |

| 4 | 224107 | 31,18,5,13,1,21,48,16,26,2,44,32,20,37,42,35,4... |

Data Manipulation

The dataset we have right now it eh user_id with the ids of items they bought. However, for each user, its item record is aggregated into strings. So first, we need extract info from it. The best way is to generate a table with each user and each product.

I have detailed the related techniques in the last blog. Long to Wide

If you cannot understand why I did it, please go the last post.

unique_items = set([int(item) for rows in purchase.id.str.split(",") for item in rows])

items_dict = dict.fromkeys(unique_items,0)

def frequency(df):

ids = df.id.str.split(",").sum()

items_dict = dict.fromkeys(unique_items,0)

for i in ids:

items_dict[int(i)] = items_dict.get(int(i),0)+1

return pd.Series(items_dict)

users = purchase.groupby("user_id").apply(frequency)

users.head()

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | ... | 39 | 40 | 41 | 42 | 43 | 44 | 45 | 46 | 47 | 48 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| user_id | |||||||||||||||||||||

| 47 | 0 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 0 | 0 |

| 68 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | ... | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |

| 113 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | ... | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 |

| 123 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 223 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

5 rows × 48 columns

users.columns.name = "Items"

users.head()

| Items | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | ... | 39 | 40 | 41 | 42 | 43 | 44 | 45 | 46 | 47 | 48 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| user_id | |||||||||||||||||||||

| 47 | 0 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 0 | 0 |

| 68 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | ... | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |

| 113 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | ... | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 |

| 123 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 223 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

5 rows × 48 columns

Which customer buy most?

users.sum(axis=1).sort_values(ascending=False).head()

user_id

269335 72

367872 70

599172 64

397623 64

377284 63

dtype: int64

Customer 269335 buys the most

For each item, which customer buy most?

most_purchases = pd.concat([users.T.max(axis=1),users.T.idxmax(axis=1)],axis=1)

most_purchases.columns = ['Count','UserID']

most_purchases

| Count | UserID | |

|---|---|---|

| Items | ||

| 1 | 4 | 31625 |

| 2 | 5 | 31625 |

| 3 | 4 | 154960 |

| 4 | 3 | 5289 |

| 5 | 3 | 217277 |

| 6 | 4 | 334664 |

| 7 | 3 | 175865 |

| 8 | 3 | 151926 |

| 9 | 4 | 269335 |

| 10 | 4 | 618914 |

| 11 | 3 | 367872 |

| 12 | 3 | 557904 |

| 13 | 4 | 653800 |

| 14 | 3 | 172120 |

| 15 | 3 | 143741 |

| 16 | 3 | 73071 |

| 17 | 4 | 366155 |

| 18 | 5 | 917199 |

| 19 | 3 | 31625 |

| 20 | 4 | 885474 |

| 21 | 4 | 884172 |

| 22 | 4 | 1199670 |

| 23 | 5 | 920002 |

| 24 | 3 | 189913 |

| 25 | 4 | 68282 |

| 26 | 4 | 967573 |

| 27 | 4 | 956666 |

| 28 | 4 | 204624 |

| 29 | 4 | 394348 |

| 30 | 2 | 21779 |

| 31 | 3 | 289360 |

| 32 | 4 | 109578 |

| 33 | 3 | 1310207 |

| 34 | 4 | 305916 |

| 35 | 3 | 450482 |

| 36 | 4 | 269335 |

| 37 | 4 | 46757 |

| 38 | 4 | 255546 |

| 39 | 5 | 599172 |

| 40 | 4 | 38872 |

| 41 | 4 | 133355 |

| 42 | 4 | 80215 |

| 43 | 4 | 996380 |

| 44 | 4 | 31625 |

| 45 | 5 | 1198106 |

| 46 | 4 | 1218645 |

| 47 | 4 | 384935 |

| 48 | 3 | 335841 |

First apply association rules

To find product-wise probability to buy together

binary = users.applymap(lambda x:0 if x==0 else 1)

binary.head()

| Items | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | ... | 39 | 40 | 41 | 42 | 43 | 44 | 45 | 46 | 47 | 48 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| user_id | |||||||||||||||||||||

| 47 | 0 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 0 | 0 |

| 68 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | ... | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |

| 113 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | ... | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 |

| 123 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 223 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

5 rows × 48 columns

Another association rules applied project goes here: click me

from mlxtend.frequent_patterns import apriori

from mlxtend.frequent_patterns import association_rules

frequent_itemsets = apriori(binary, min_support=0.02, use_colnames=True)

rules = association_rules(frequent_itemsets, support_only=True,min_threshold=0.01)

rules = rules[["antecedents","consequents","support"]]

rules=rules[(rules['antecedents'].apply(len)==1)&(rules['consequents'].apply(len)==1)]

rules.antecedents=rules.antecedents.apply(lambda x: [item for item in x][0])

rules.consequents=rules.consequents.apply(lambda x: [item for item in x][0])

rules.support = rules.support.apply(lambda x: 1-x)

rules.head()

| antecedents | consequents | support | |

|---|---|---|---|

| 0 | 1 | 2 | 0.802090 |

| 1 | 2 | 1 | 0.802090 |

| 2 | 1 | 3 | 0.878843 |

| 3 | 3 | 1 | 0.878843 |

| 4 | 1 | 4 | 0.940526 |

rules.support.describe()

count 2182.000000

mean 0.924455

std 0.036978

min 0.792968

25% 0.905636

50% 0.925779

75% 0.956470

max 0.979988

Name: support, dtype: float64

Using pivot table to convert to similarity matrix and try clustering

- AgglomerativeClustering is a hierachical method.

- DBSCAN is a density-based clustering method.

- Spectral Clusteirng works for the similarity matrix(affinity matrix).

- KMeans is most common used clustering method based on partition.

from sklearn.cluster import AgglomerativeClustering

from sklearn.cluster import DBSCAN

from sklearn.cluster import SpectralClustering

from sklearn.cluster import KMeans

data_matrix = rules.pivot_table(index = "antecedents",columns="consequents",values = "support").fillna(0)

#model = AgglomerativeClustering(affinity='precomputed', n_clusters=10, linkage='average').fit(data_matrix)

#model = DBSCAN(metric="precomputed",eps=0.1).fit(data_matrix)

#model = SpectralClustering(n_clusters = 11,affinity='precomputed').fit(data_matrix)

model = KMeans(n_clusters = 10).fit(data_matrix)

labels = pd.DataFrame({"label":pd.Series(model.labels_),"Item_id":list(range(1,49))})

results=items.merge(labels,on='Item_id').sort_values("label")

results['Index']=results.groupby('label').cumcount()+1

results

| Item_name | Item_id | label | Index | |

|---|---|---|---|---|

| 12 | eggs | 14 | 0 | 1 |

| 15 | butter | 8 | 0 | 2 |

| 14 | yogurt | 48 | 0 | 3 |

| 13 | milk | 16 | 0 | 4 |

| 11 | cheeses | 21 | 0 | 5 |

| 41 | sandwich bags | 7 | 1 | 1 |

| 40 | aluminum foil | 15 | 1 | 2 |

| 39 | toilet paper | 33 | 1 | 3 |

| 38 | paper towels | 24 | 1 | 4 |

| 20 | waffles | 5 | 2 | 1 |

| 19 | pasta | 31 | 2 | 2 |

| 0 | coffee | 43 | 3 | 1 |

| 36 | laundry detergent | 18 | 3 | 2 |

| 37 | dishwashing | 27 | 3 | 3 |

| 3 | soda | 9 | 3 | 4 |

| 2 | juice | 38 | 3 | 5 |

| 1 | tea | 23 | 3 | 6 |

| 28 | cherries | 25 | 4 | 1 |

| 34 | cucumbers | 42 | 4 | 2 |

| 33 | cauliflower | 45 | 4 | 3 |

| 32 | carrots | 10 | 4 | 4 |

| 31 | broccoli | 44 | 4 | 5 |

| 30 | apples | 32 | 4 | 6 |

| 29 | grapefruit | 20 | 4 | 7 |

| 35 | lettuce | 2 | 4 | 8 |

| 27 | berries | 40 | 4 | 9 |

| 23 | poultry | 6 | 4 | 10 |

| 25 | pork | 47 | 4 | 11 |

| 4 | sandwich loaves | 39 | 4 | 12 |

| 5 | dinner rolls | 37 | 4 | 13 |

| 6 | tortillas | 34 | 4 | 14 |

| 7 | bagels | 13 | 4 | 15 |

| 26 | bananas | 46 | 4 | 16 |

| 47 | pet items | 3 | 4 | 17 |

| 18 | sugar | 1 | 4 | 18 |

| 24 | beef | 17 | 4 | 19 |

| 46 | baby items | 4 | 5 | 1 |

| 16 | cereals | 11 | 6 | 1 |

| 17 | flour | 30 | 6 | 2 |

| 10 | ketchup | 41 | 7 | 1 |

| 9 | spaghetti sauce | 26 | 7 | 2 |

| 8 | canned vegetables | 28 | 7 | 3 |

| 42 | shampoo | 12 | 8 | 1 |

| 43 | soap | 35 | 8 | 2 |

| 44 | hand soap | 29 | 8 | 3 |

| 45 | shaving cream | 19 | 8 | 4 |

| 21 | frozen vegetables | 22 | 9 | 1 |

| 22 | ice cream | 36 | 9 | 2 |

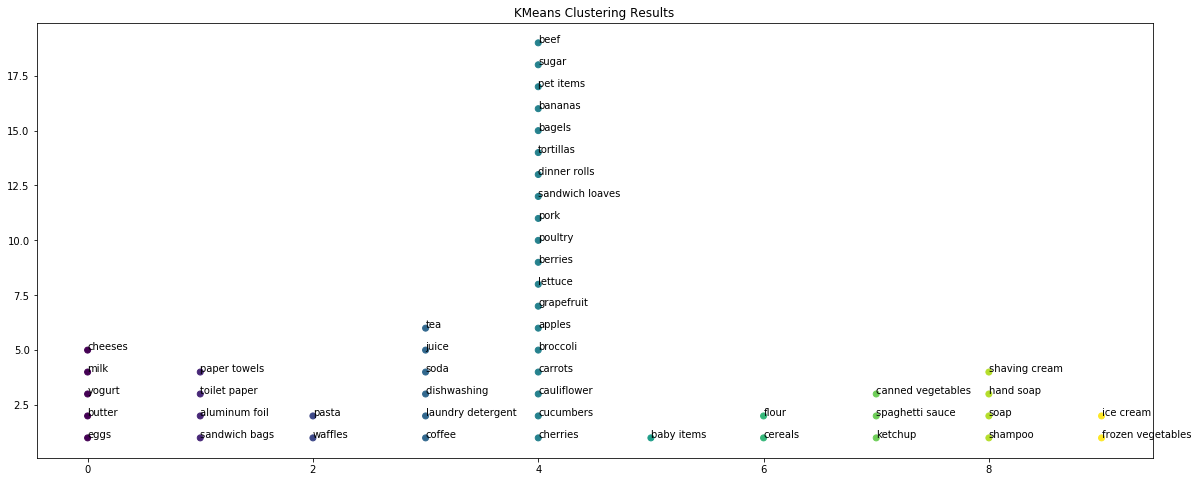

Visualizing the clustering

import matplotlib.pyplot as plt

#plt.figure(figsize=(20,8))

#plt.scatter(results.label,results.Index)

fig, ax = plt.subplots(figsize=(20,8))

ax.scatter(results.label, results.Index,c=results.label)

ax.set_title("KMeans Clustering Results")

i=0

for txt in results.Item_name:

ax.annotate(txt, (results.label.iloc[i], results.Index.iloc[i]))

i=i+1

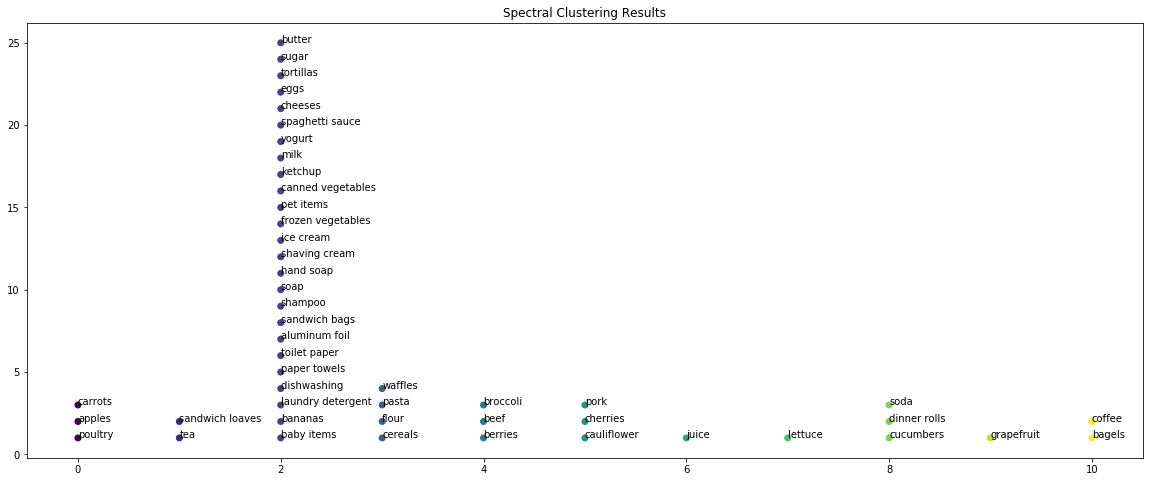

fig, ax = plt.subplots(figsize=(20,8))

ax.scatter(results.label, results.Index,c=results.label)

ax.set_title("Spectral Clustering Results")

i=0

for txt in results.Item_name:

ax.annotate(txt, (results.label.iloc[i], results.Index.iloc[i]))

i=i+1

Conclusion

**It turns out KMeans and Spectal Clustering work better. **

We can suggest some co-purchase items to be placed nearby for customers’ convenience:

- coffe+bagels

- broccoli+beef+berries

- soda+dinner rolls

- pork+cherries+cauliflower

It gives us some hints that we can put items together with their dishes. For example, people are likely to cook broccoli and beef together, then we will put them closely. Then, people will more likely to grab them together.